How to detect which language a text is written in? Or when science meets human!

May 13, 2007

As I mentioned earlier in my spam attack analysis, I wanted to know which languages the spam I receive is written in. My first brute-force idea was to take each word one by one and look it up in English/French/German/… dictionaries. But with this approach I would miss all the conjugated verbs (unless I had a really good dictionary, like the one I now have as a Firefox plugin). Then I remembered that languages might differ in the distribution of their letters, but I had no statistics about that…

That was it for my own brainstorming, so I decided to look at what Google thinks about this problem. I first landed on an online language detector… The easy solution would have been to abuse this service, which must use some cool algorithms, but I wanted to know what kind of algorithms they could be, and I didn’t want to rely on any third-party web service. Finally I read about Evaluation of Language Identification Methods, whose abstract seemed perfect:

Language identification plays a major role in several Natural Language Processing applications. It is mostly used as an important preprocessing step. Various approaches have been made to master the task. Recognition rates have tremendously increased. Today identification rates of up to 99 % can be reached even for small input. The following paper will give an overview about the approaches, explain how they work, and comment on their accuracy. In the remainder of the paper, three freely available language identification programs are tested and evaluated.

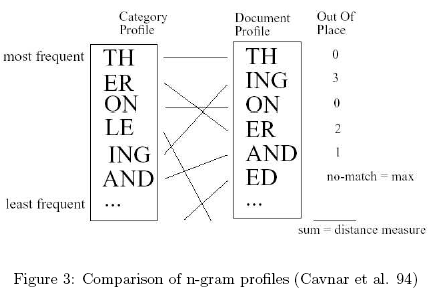

I found the N-gram approach on page 8 (chapter 4) rather interesting. The principle is to take long texts written in each language you want to recognize (English, French…), which we will call training texts, cut them into pieces of a fixed length, and count how many times each piece appears. Do the same with the text you want to identify, then find the training text that matches it best: that training text is most likely written in the same language as your text.
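To make the counting step concrete, here is a minimal Python sketch of building such a "profile" for a text. It is loosely modeled on the Cavnar-Trenkle idea, not on the Ruby or C code mentioned in this post, and several choices are assumptions: words are runs of letters, each word is padded with an underscore on both sides, N runs from 1 to 5, and only the 300 most frequent N-grams are kept.

```python
import re
from collections import Counter


def padded_ngrams(word, n):
    """All n-grams of `word` after padding it with one underscore on each side."""
    padded = f"_{word}_"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


def build_profile(text, max_n=5, size=300):
    """Return the `size` most frequent n-grams (n = 1..max_n) of `text`, most frequent first."""
    counts = Counter()
    # Words are runs of Unicode letters; digits, punctuation and spaces act as separators.
    for word in re.findall(r"[^\W\d_]+", text.lower()):
        for n in range(1, max_n + 1):
            counts.update(padded_ngrams(word, n))
    # Highest count first; ties broken alphabetically so the ranking is deterministic.
    ranked = sorted(counts, key=lambda gram: (-counts[gram], gram))
    return ranked[:size]
```

You would call `build_profile` once per training text and once on the text to identify; comparing the resulting ranked lists is a separate step.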

The pieces are the N-grams, i.e. for the word GARDEN the bi-grams (N=2) are: _G, GA, AR, RD, DE, EN, N_, where _ stands for the blank that pads the word on each side.
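A few lines of Python reproduce that list. This sketch pads with exactly one underscore per side, which is enough for bi-grams; implementations differ in how they pad longer N-grams, so treat the tri-gram line as one possible convention.

```python
def padded_ngrams(word, n=2, pad="_"):
    """Return the n-grams of `word` with one `pad` character added on each side."""
    padded = pad + word + pad
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


print(padded_ngrams("GARDEN"))       # ['_G', 'GA', 'AR', 'RD', 'DE', 'EN', 'N_']
print(padded_ngrams("GARDEN", n=3))  # ['_GA', 'GAR', 'ARD', 'RDE', 'DEN', 'EN_']
```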

Now there are various ways of finding the best-matching text by playing with the N-grams, distances, scores…
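One of those ways is the "out-of-place" measure described by Cavnar and Trenkle, which compares rankings rather than raw counts: for each N-gram in the text's ranked profile, add how far its rank is from its rank in a language's profile, and add a fixed maximum penalty when the language profile lacks it. The sketch below assumes profiles are lists of N-grams sorted by decreasing frequency; setting the penalty to the length of the language profile is my own choice, and the two profiles in the usage lines are made-up toy data.

```python
def out_of_place(doc_profile, lang_profile):
    """Sum of rank differences between two frequency-ranked n-gram lists (lower = closer).

    An n-gram of the document missing from the language profile costs a fixed
    maximum penalty, assumed here to be the language profile's length.
    """
    lang_rank = {gram: rank for rank, gram in enumerate(lang_profile)}
    max_penalty = len(lang_profile)
    distance = 0
    for rank, gram in enumerate(doc_profile):
        if gram in lang_rank:
            distance += abs(rank - lang_rank[gram])
        else:
            distance += max_penalty
    return distance


def best_language(doc_profile, lang_profiles):
    """Name of the language whose profile is closest to the document's profile."""
    return min(lang_profiles, key=lambda lang: out_of_place(doc_profile, lang_profiles[lang]))


# Toy, hand-made profiles for illustration only.
profiles = {"toy_a": ["e", "_", "t"], "toy_b": ["a", "_", "o"]}
print(best_language(["e", "t", "_"], profiles))  # toy_a
```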

I found an implementation from 1996 in C, here, with sources. So I followed the same algorithm and implemented it in Ruby. Those C sources reminded me of my C days, when you had to implement your own lists and hashes. The sources are optimized for memory usage (10 years ago…)… In the end the Ruby code is about a hundred lines while the C is four times longer, and the Ruby code is easier to read. Don’t take that as a demonstration, it is not!! I admit the C binary is maybe a bit faster (but not by that much ;)). I’ll try to commit it to RubyForge when I have some time.

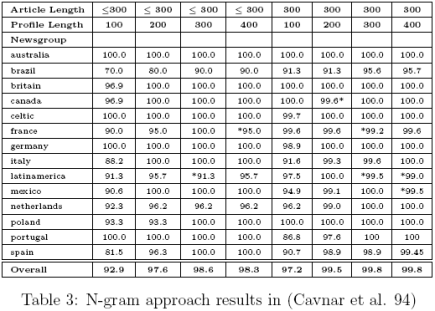

The results are excellent, as shown in the paper.

Anyway, in this story the most interesting part was not the implementation but the method: it is funny that you can identify languages (and so human populations as well) without requiring any linguistic knowledge, ignoring grammar and the meanings of words (dictionaries)…, only by analyzing letters and blocks of letters from Shakespeare or Baudelaire. N-grams can also be used in other areas, for example in music to predict which note is likely to follow.
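The music remark can be illustrated with the same counting idea applied to notes instead of letters: count which note follows each note (note bi-grams) and predict the most frequent follower. This is a minimal sketch; the melodies are made-up toy data, and a real system would use longer contexts and some smoothing.

```python
from collections import Counter, defaultdict


def train_bigrams(melodies):
    """For every note, count which notes follow it across all melodies."""
    followers = defaultdict(Counter)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            followers[current][following] += 1
    return followers


def predict_next(followers, note):
    """Most frequent follower of `note`, or None if the note never had a successor."""
    if note not in followers:
        return None
    return followers[note].most_common(1)[0][0]


model = train_bigrams([["C", "D", "E", "C"], ["C", "D", "G"]])
print(predict_next(model, "C"))  # D
print(predict_next(model, "G"))  # None
```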

[…] How to detect which language a text is written in? Or when science meets human! […]

This approach (N-gram) does not work for most Asian languages.

Roshan, I think that depends on what you mean by Asian languages. I don’t know that it would be the best way of identifying Japanese or Korean: they could be identified far more easily through their unique script features (I’m simplifying a bit, but I doubt that there’ll be many people identifying Ryukyuan or Ainu).

As far as the Brahmic scripts are concerned, though, I’d be interested to know why N-grams don’t work. Devanagari, for example, is used to write a number of different languages, but the number of symbols is of the same order of magnitude as in the Latin alphabet. As long as they are coded properly for the algorithm and the implementation doesn’t assume one byte per character, why wouldn’t an N-gram approach work to distinguish, say, Hindi from Marathi?
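The "one byte per character" caveat is easy to see in Python: Devanagari code points take three bytes each in UTF-8, so a byte-oriented program counts N-grams that cut letters in half. This sketch assumes UTF-8 input and treats code points as letters, which is only an approximation, since a conjunct such as स्त spans several code points.

```python
def char_ngrams(text, n):
    """N-grams over Unicode code points (Python str indexes by code point)."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


def byte_ngrams(text, n, encoding="utf-8"):
    """N-grams over the encoded bytes, i.e. what a one-byte-per-character program sees."""
    data = text.encode(encoding)
    return [data[i:i + n] for i in range(len(data) - n + 1)]


word = "\u0928\u092e\u0938\u094d\u0924\u0947"  # "नमस्ते" written with escapes

print(len(word), len(word.encode("utf-8")))                  # 6 18
print(len(char_ngrams(word, 2)), len(byte_ngrams(word, 2)))  # 5 17
print(byte_ngrams(word, 2)[0])  # b'\xe0\xa4': two-thirds of one letter, not valid UTF-8 on its own
```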

Hi Roshan,

Indeed, you’re right; it’s even stated in the paper:

“One disadvantage of their method has to be mentioned here. The N-gram approach will not be able to work with Asian documents as it relies on correct tokenization. Tokenization for Asian Languages, as stated before, is a hard task. To overcome this problem (Kikui 95) introduced a statistical method which works bytebased and does not need tokenization beforehand.”
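A byte-level count needs no tokenization at all: it slides a window over the raw bytes, so text written without spaces, such as Japanese, goes through exactly the same code. This is only a plain byte N-gram counter written to illustrate the idea, not Kikui’s actual statistical method; the sample string and the choice of N = 3 are assumptions.

```python
from collections import Counter


def byte_ngram_counts(data: bytes, n: int) -> Counter:
    """Count every n-byte window of the raw input; no word splitting, no decoding."""
    return Counter(data[i:i + n] for i in range(len(data) - n + 1))


text = "日本語のテキスト"  # Japanese sample: no spaces between words
counts = byte_ngram_counts(text.encode("utf-8"), 3)
print(sum(counts.values()))  # 22 windows over 24 bytes
```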

Actually, I don’t receive Asian spam yet (friends of mine do). Did you work it out with the statistical method suggested by the paper?

[…] How to detect which language a text is written in? Or when science meets human! As I mentioned earlier in my spam attack analysis, I wanted to know which language spams I receive are written in. My […] […]

Hi guys,

Actually N-grams work fine for Indic languages. Remember that the Cavnar paper is from before Unicode days (1996), so when they’re talking about problems with “tokenization,” I suspect that they’re talking about encodings, as opposed to the other sense of tokenization, in the sense of splitting text up into words. For Indic languages especially, the encoding situation was pretty awful before Unicode came around — basically people would create fonts that used the ASCII character space, so as far as processing was concerned the machine thought it was still ASCII.

But where words stop matters not at all for N-gram-based language classification; with Unicode you can think more or less in terms of “letters,” and count those. So, if you have a good multilingual corpus in Unicode, and you train on that, you can build a system that identifies a boatload of languages.

That’s what I did here. And indeed it can distinguish Marathi from Hindi.

That said, there are a few problems:

It has trouble with Chinese and Japanese, but that’s because my samples are from that UDHR site, and they need to be bigger.

I did it with Python, which has pretty good Unicode support. Ruby’s Unicode support makes me weep, daily.

Would love to see your Ruby, sounds neat.

Hi Pat,

Thanks for your comment. Actually, I haven’t bothered much about Unicode so far, as I assumed my training texts and the text to identify would be in the same encoding (which is not true). And you’re right about Ruby’s Unicode support…

Are you using the N-gram algorithm as well? I tested your script; it works well, but it seems to need a lot of characters. Normally a dozen words is enough for a good result. Is it because your training texts are small? (I use 70 KB of raw training text.) And how do you decide that something is ‘unknown’? Do you have a threshold? Otherwise it should always return an answer (right or wrong).
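One way to answer the ‘unknown’ question, sketched here as an assumption rather than what either script actually does: after computing a distance to every language, treat all languages within a small margin of the best score as candidates, and report ‘unknown’ when more than one survives. The 5 % margin and the single-candidate limit are illustrative values that would need tuning.

```python
def classify_or_unknown(distances, margin=1.05, max_candidates=1):
    """Return the best language from {language: distance}, or None if unsure.

    Languages whose distance is within `margin` times the best distance are
    candidates; more than `max_candidates` of them means "unknown". Both
    defaults are illustrative assumptions, not tuned values.
    """
    if not distances:
        return None
    ranked = sorted(distances, key=distances.get)
    best = distances[ranked[0]]
    candidates = [lang for lang in ranked if distances[lang] <= best * margin]
    if len(candidates) > max_candidates:
        return None
    return candidates[0]


print(classify_or_unknown({"english": 100, "french": 300}))  # english
print(classify_or_unknown({"english": 100, "french": 104}))  # None
```

An absolute cut-off on the best distance (for instance relative to the text length) is another option, and the two can be combined.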

Hi Sébastien,

I’m not using the algorithm from the Cavnar paper, but the “raw data” is N-grams (bigrams and trigrams, actually).

You’re right about the document size not being big enough. As I mentioned, I just used what was in the UDHR corpus because it had a lot of languages, everything was utf-8, and it was really easy to process.

What I should really do is some proper testing, controlling for input corpus size, figuring out how big the models should be, what mix to use of bigrams and trigrams, etc. I imagine it could be tuned to work better with
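Exposing the bigram/trigram mix as a parameter makes that kind of tuning experiment straightforward. The hypothetical `mixed_ngram_features` below (an illustration, not Pat’s code) pools the relative frequencies of several N-gram sizes into one table, lets N-grams span word boundaries by collapsing whitespace to a single space, and leaves the choice of sizes to the caller.

```python
from collections import Counter


def mixed_ngram_features(text, sizes=(2, 3)):
    """Pool character n-grams of the given sizes into one relative-frequency table.

    Whitespace runs are collapsed to one space and the text is padded with a
    space on each side, so n-grams may span word boundaries.
    """
    normalized = " " + " ".join(text.lower().split()) + " "
    counts = Counter()
    for n in sizes:
        counts.update(normalized[i:i + n] for i in range(len(normalized) - n + 1))
    total = sum(counts.values())
    # Very short input combined with large sizes can yield no n-grams at all.
    if total == 0:
        return {}
    return {gram: count / total for gram, count in counts.items()}


print(mixed_ngram_features("Garden", sizes=(2,)))
print(mixed_ngram_features("Garden", sizes=(2, 3)))
```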

By the way, I’ve got a bunch more links on this topic here:

http://del.icio.us/patfm/language_identification

(There are a bunch of Perl implementations worth looking at, for instance.)

Maybe the folks who are interested in this should try to collaborate on creating a language id gem of some kind, if we can figure out some way to hack around Ruby’s lousy Unicode support (heck, Rails manages to handle Unicode…). I know Paul knows a lot about this stuff (hey Paul =] )

-pat

Your comments are very much appreciated!

I myself started to ponder language detection using n-grams when I wondered how the DivX subtitles that can be uploaded to

http://www.opensubtitles.org/en

are detected, and how the range of detectable languages could be increased.

I found a Perl version, Gertjan van Noord’s TextCat:

http://www.let.rug.nl/~vannoord/TextCat/

and a Python version by Thomas Mangin:

http://thomas.mangin.me.uk/software/python.html

but I had difficulty finding a PHP version… that’s why I wrote one myself, at

http://boxoffice.ch/pseudo/index.php

Since I’m definitely not experienced in writing code, I was surprised that three small functions, together with the fingerprints of the languages, do the job. Detecting Asian (Unicode) languages was no big issue, since PHP string functions obviously work bytewise (unless told otherwise).

I put the code, a demo, the fingerprints and more on my website as well. It might be instructive for some.

Hi reto!

Thanks for sharing your experience! It’s always interesting to see the domains language detection can be applied to and the solutions chosen.

And sorry about the comments being blocked… It seems Akismet is very restrictive at… spam-freeing comments.

It even blocks mine…

I bought a scarf the other day and it has some writing on it. I’m really curious as to what it says, because I don’t want to wear it if it’s some sort of satanic rambling. Looking at the print, you’d say it was Turkish or Iranian or something, but I tried translating from a few languages and still couldn’t find out what it means.

How can I go about finding out the meaning of this phrase?

By the way the writing reads ‘dariguguseman tujuhjemaatyalomsawah l’

[…] How to detect which language a text is written in? Or when science meets human! « The Nameless One […]

I have made a language detector. It’s available here:

Language Detector

[…] Check string language Also : https://tnlessone.wordpress.com/2007/…e-meets-human/ and more generally : http://www.google.fr/search?hl=fr&q=…+natural&meta= — Patrice […]

Here is my n-gram based language detector, written in ruby:

http://github.com/feedbackmine/language_detector/tree/master

I have been using it in production and it performs very well.

This n-gram based tool, written in PHP, detects a site’s language from its content (for a user-specified URL):

http://www.site-language.com

It sometimes has problems with non-UTF-8 encodings, but mostly works OK.

This one worked awesome! http://www.site-language.com

Thanks

[…] how-to-detect-which-language-a-text-is-written-in-or-when-science-meets-human […]

This post is very interesting. I would like to build my own language detector, but it seems a bit hard for now.

“It is funny that you can identify languages (so human population as well) by without requiring linguistic knowledge: ignoring grammar, senses of words”

For this approach the text was split into words, so some grammar was used. Nevertheless, if you took any computer language and made 3-grams from the complete document, it would still work, and the result would be even more obvious: Lisp would have “)))” somewhere near the top, and the .*ML languages would have “</”.
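That experiment is easy to try: count character trigrams over the whole document without splitting it into words. This is a minimal sketch; the Lisp and HTML snippets are made-up samples, and a real comparison would need larger documents per language.

```python
from collections import Counter


def char_trigrams(document):
    """Count every 3-character window of the whole document (no word splitting)."""
    return Counter(document[i:i + 3] for i in range(len(document) - 2))


lisp = "(defun square (x) (* x x))\n(print (square (square 3)))"
html = "<html><body><p>Hello</p></body></html>"

print(char_trigrams(lisp).most_common(5))
print(char_trigrams(html).most_common(5))
```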

Going further in this direction, has anyone tried using 24-grams for binary data classification?

You can use http://detectlanguage.com for this purpose too. It’s a free API.

[…] any method, you’re just making an educated guess. There are some math-related articles out there […]

I have written a web service (http://www.whatlanguage.net) that can identify 100+ languages. It can handle texts, websites and documents. It’s easy to use, accurate and fast.

Wonderful post; however, I was wondering if you could write a little more on this topic? I’d be very grateful if you could elaborate a little further. Appreciate it!

[…] any method, you’re just making an educated guess. There are some math-related articles out […]

Very useful article, but I’m looking for an open-source project (a language detection class) available for download. Does anyone know where I can find one?

[…] interesting topic, and various researches have been conducted trying to find a universal solution (example). Some of the approaches analyze the overall text or at least a sentence trying to find specific […]

[…] Whichever method you use, you are just making an educated guess. There are some math-related articles out there […]